Video game lighting is a process that adds light to video games in order to create the desired mood. It is an important aspect of the art and design of video games and can help establish a unique style, tone, and atmosphere.

The history of video game lighting can be traced back to the birth of computer graphics, when programmers were still figuring out how to display images on a screen. An interesting twist in how computers display these images was introduced by Arthur Appel, whose work on shading led to more realistic lighting effects than had previously been possible; more about him later. The earliest games often used simple two-dimensional black-and-white graphics, with no color or shading. As computers became more powerful, developers were able to create more complicated graphics, with more colors and with shading that made objects appear three-dimensional.

In the 1980s, computer graphics became more advanced and programmers started experimenting with different ways to display light in their games. One technique they adopted was Gouraud shading, in which lighting is calculated at the vertices of each polygon and the resulting vertex colors are blended across the polygon’s surface. The model was developed by French computer scientist Henri Gouraud and published in 1971. Gouraud shading is not a physical simulation of how light is emitted and received by objects; instead, it mimics the result by evaluating a lighting equation (typically diffuse, and sometimes specular, reflection) at each vertex, based on how the surface there is oriented relative to the light, and then interpolating between those values.
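To make the "blending together the colors of vertices" step concrete, here is a minimal sketch of the interpolation part only. It assumes the three vertex colors have already been lit by some lighting equation; the function name `gouraud_interpolate` and the red/green/blue triangle are made up for illustration.

```python
def gouraud_interpolate(vertex_colors, weights):
    """Blend three RGB vertex colors using barycentric weights that sum to 1."""
    return tuple(
        sum(color[channel] * w for color, w in zip(vertex_colors, weights))
        for channel in range(3)
    )

# Hypothetical triangle whose corners were lit red, green and blue.
vertex_colors = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]

# A pixel sitting exactly on the first vertex takes that vertex's color.
corner = gouraud_interpolate(vertex_colors, (1.0, 0.0, 0.0))

# A pixel halfway along the edge between the first two vertices is an even mix.
edge_mid = gouraud_interpolate(vertex_colors, (0.5, 0.5, 0.0))
```

Because lighting is only evaluated at the corners, anything that happens in the middle of a large polygon, such as a small highlight, gets smoothed away. That is the main limitation later techniques tried to fix.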

I was told that other games that used the Gouraud shading model to some degree were “Tetris”, “Lode Runner” and “Tron”, but I could not verify this. It is safe to say, however, that games from around 1992 used Gouraud shading, and “Descent” used it very well. It is often said that Quake used Gouraud shading only partially, because its developers created surface-based lighting to get around Gouraud shading’s limitations; more about that later.

The second is the Phong reflection model, developed by Vietnamese-born computer scientist Bui Tuong Phong and published in 1975. It led to further improvements in lighting techniques for video games, such as bump mapping, which James Blinn introduced in 1978 and which builds on the Phong reflection model. Sega’s Hikaru arcade system was first out of the gate with Phong shading. This was important because it allowed Sega to produce more detailed graphics, especially in the game “Brave Firefighters”.

For the next few years, the Sega Hikaru was the only arcade system capable of Phong shading, which featured prominently in games such as “Planet Harriers (2000)”. Sega ported only one Hikaru game to a home console, “Virtual-On Force (2001)”, with new 3D graphics. All the other games remained arcade-only, because most home systems did not support Phong shading until much later, when it had become the norm on consoles.

However, Phong shading never really took off in PC games at the time because it was computationally intensive, despite the introduction of the Radeon 9700 graphics card. Doom 3, released in 2004, applied a less nuanced approximation rather than true Phong shading, and Half-Life 2’s Source engine did not support Phong shading when it was first released in 2004. Phong shading gained popularity around 2005, with the release of Microsoft’s Xbox 360 and more powerful CPUs. Even today, consoles tend to have specific hardware that supports higher-end shading, including ray tracing.
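To give a feel for why Phong shading was considered expensive, here is a rough sketch of just the specular highlight term of the Phong reflection model. A renderer doing Phong shading has to evaluate something like this for every pixel rather than once per vertex. The helper names and the test vectors are my own, not taken from any engine.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def phong_specular(normal, light_dir, view_dir, shininess):
    """Specular term: (R . V) ** shininess, with R the mirrored light direction."""
    n, l, v = normalize(normal), normalize(light_dir), normalize(view_dir)
    n_dot_l = dot(n, l)
    if n_dot_l <= 0.0:
        return 0.0  # the light is behind the surface, so no highlight
    # Mirror the direction-to-light about the normal: R = 2(N . L)N - L
    r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))
    return max(0.0, dot(r, v)) ** shininess

normal = (0.0, 0.0, 1.0)
light = (0.0, 0.0, 1.0)

# Looking straight down the mirrored ray gives the full highlight.
head_on = phong_specular(normal, light, (0.0, 0.0, 1.0), shininess=32)

# Viewed from 45 degrees, a high shininess makes the highlight fall off sharply.
off_axis = phong_specular(normal, light, (1.0, 0.0, 1.0), shininess=32)
```

The `shininess` exponent controls how tight the highlight is: larger values give a smaller, sharper spot.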

Since then we have developed normal mapping and specular lighting, which are used in modern computing. The third is the Lambert model, named after the Swiss polymath Johann Heinrich Lambert, who set out its principles in his book Photometria in 1760. It describes how a matte surface looks brightest when it faces the light directly and dims as it tilts away, and it is the standard model for diffuse lighting in computer graphics rendering. Computer graphics has kept evolving with the help of these three types of illumination models. Many other differences exist between these models as well, but I am not going to go technical on you.
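For anyone who does want a tiny bit of the technical side, Lambert’s idea fits in a few lines. This is a sketch of the cosine law only; the parameter names `albedo` and `light_intensity` are my own labels for "how reflective the surface is" and "how bright the light is".

```python
import math

def lambert_diffuse(albedo, light_intensity, angle_degrees):
    """Brightness of a matte surface tilted angle_degrees away from the light."""
    cos_theta = math.cos(math.radians(angle_degrees))
    # Surfaces facing away from the light (negative cosine) receive nothing.
    return albedo * light_intensity * max(0.0, cos_theta)

facing = lambert_diffuse(0.8, 1.0, 0)     # surface points straight at the light
tilted = lambert_diffuse(0.8, 1.0, 60)    # tilted 60 degrees: half as bright
behind = lambert_diffuse(0.8, 1.0, 120)   # facing away: unlit
```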

For the sake of simplicity, we will consider bump mapping and normal mapping the same. Game developers use them to add fine surface detail to 3D models in-game without adding extra geometry. Examples include “Half-Life 2”, “The Legend of Zelda: Twilight Princess HD”, and “Assassin’s Creed Odyssey”. To be fair, widespread use of normal mapping began around 2003, but it was used before that on consoles such as the Sega Dreamcast, and originally on PixelFlow, a parallel rendering machine built at a university. In the simplest sense, bump mapping is a way to make surfaces look more realistic by giving them the appearance of height. When a surface is defined in 3D software as a cube with flat faces, it will appear flat unless bump maps are applied.
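Here is a small sketch of the idea, assuming the bump map is a plain 2D grid of height values. The surface itself stays flat; we only work out which way the surface would be facing if the bumps were real, and hand that direction to the lighting calculation. The function name and the `strength` parameter are illustrative, not from any particular engine.

```python
import math

def normal_from_height(height, x, y, strength=1.0):
    """Estimate a unit surface normal at (x, y) from a 2D height grid."""
    rows, cols = len(height), len(height[0])
    # Clamp neighbours at the borders so edge texels still get a normal.
    left = height[y][max(x - 1, 0)]
    right = height[y][min(x + 1, cols - 1)]
    up = height[max(y - 1, 0)][x]
    down = height[min(y + 1, rows - 1)][x]
    # Height differences along x and y; a steeper slope tilts the normal further.
    dx = (right - left) * strength
    dy = (down - up) * strength
    length = math.sqrt(dx * dx + dy * dy + 1.0)
    return (-dx / length, -dy / length, 1.0 / length)

flat = [[0.0] * 3 for _ in range(3)]
ramp = [[float(x) for x in range(3)] for _ in range(3)]  # rises towards +x

flat_normal = normal_from_height(flat, 1, 1)  # points straight out of the surface
ramp_normal = normal_from_height(ramp, 1, 1)  # leans back towards -x
```

A normal map simply stores these directions in a texture ahead of time, so the game does not have to derive them from heights while it runs.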

When we do lighting, we depend on these techniques and technologies for our light to be rendered correctly onto the material. As mentioned earlier, John Carmack’s Quake was the first game to use lightmapping, which we still use today to bake lighting into texture maps. As 3D graphics hardware that could store multiple textures became more common and creating lightmaps in real time became possible, engines began to use lightmaps as a secondary texture. Lightmaps can also be combined with real-time lights (mixed lighting) to produce high-quality colored lighting. Because a lightmap stores lighting per texel rather than per vertex, it avoids the defects caused by Gouraud shading. However, even with advancements in real-time ray-tracing technology, ray tracing is still too slow to rely on for shadows in most games, so we still use shadow mapping where possible. Shadow mapping was introduced in 1978 by Lance Williams in his paper “Casting curved shadows on curved surfaces”. If you pay attention, you will notice a connection between when these techniques were developed and how they were later used together to solve the complex problems of the time.
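The core of shadow mapping is a simple depth comparison, which the following toy sketch tries to show. It flattens the shadow map to a one-dimensional row of texels to keep things short; the scene, the function names and the `bias` value are all made up for illustration.

```python
def build_shadow_map(surfaces, size):
    """Keep, for every texel, the depth of the surface closest to the light."""
    shadow_map = [float("inf")] * size
    for texel, depth in surfaces:
        shadow_map[texel] = min(shadow_map[texel], depth)
    return shadow_map

def in_shadow(shadow_map, texel, depth_from_light, bias=0.01):
    """A point is shadowed when something nearer to the light covers its texel."""
    # The small bias stops a surface from shadowing itself due to rounding.
    return depth_from_light - bias > shadow_map[texel]

# Hypothetical scene as seen from the light: a crate at depth 2.0 covers
# texel 1, and the floor sits at depth 5.0 under every texel.
surfaces = [(0, 5.0), (1, 2.0), (1, 5.0), (2, 5.0)]
shadow_map = build_shadow_map(surfaces, size=3)

floor_under_crate = in_shadow(shadow_map, 1, 5.0)  # the crate blocks the light
open_floor = in_shadow(shadow_map, 0, 5.0)         # nothing in the way
crate_top = in_shadow(shadow_map, 1, 2.0)          # the crate itself is lit
```

In a real renderer the first step is a render pass from the light’s point of view and the second runs for every pixel on screen, but the comparison is the same.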

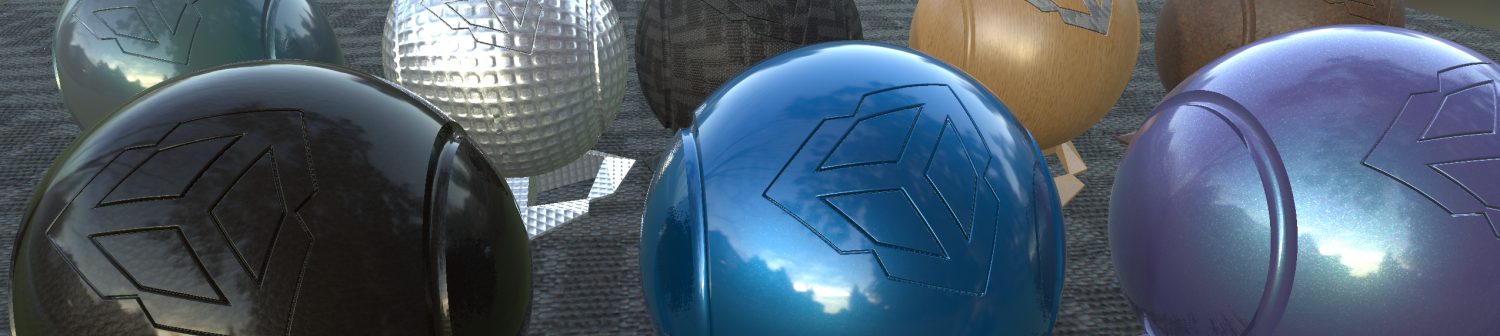

Let us not forget physically based rendering materials. The approach has been used in film for a long time, but it wasn’t until 2007 that it came to 3D. It is now very common and is used by most 3D art professionals and AAA games. We use it in real-time engines such as Unreal Engine, Unity, Unigine and CryEngine. Starting in the 1980s, a number of rendering researchers worked on the theory at Cornell University, and a paper from around 1997 describes the lab’s research. It uses normal maps too, as mentioned earlier, so most of these technologies are extensions of, improvements on, or otherwise connected to one another in order to create the desired result.

Like many things in our world, someone then popularized the idea. In this case it was Matt Pharr and Greg Humphreys, whose book “Physically Based Rendering” (first published in 2004) popularized the term, also known as PBR; in 2014 the book earned them and Pat Hanrahan a Scientific and Technical Academy Award. A PBR material is a virtual pipeline to simulate any kind of physical material. Pros include the ability to represent any kind of physical material, which is especially beneficial for 3D models that need to look accurate when light hits them. The texture has parameters such as color, metalness and roughness/specular. PBR textures rely on the microfacet theory from the early 80s, which models a surface as a collection of tiny facets. The orientation of these tiny surfaces determines how light is reflected, and so whether the surface looks rough or shiny at larger scales. One of the keys is how the data is organized so that all of these models can be merged into a single one, while still allowing us to control each of the layers individually to our liking.
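To show how a single roughness value can stand in for all those tiny surfaces, here is a sketch of one widely used microfacet distribution, usually called GGX or Trowbridge-Reitz. Treat the choice as an assumption on my part: the article does not say which distribution any given engine uses, and squaring the roughness before use is a common convention rather than a rule.

```python
import math

def ggx_distribution(n_dot_h, roughness):
    """Relative share of microfacets aligned with the half vector (GGX form)."""
    alpha = roughness * roughness  # assumed convention: remap artist roughness
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)

# n_dot_h = 1.0 is the exact mirror direction, where a highlight peaks.
smooth_peak = ggx_distribution(1.0, roughness=0.2)
rough_peak = ggx_distribution(1.0, roughness=0.8)

# Slightly away from the mirror direction the ordering flips.
smooth_off = ggx_distribution(0.9, roughness=0.2)
rough_off = ggx_distribution(0.9, roughness=0.8)
```

A smooth surface concentrates almost all of its facets around the mirror direction, giving a small, intense highlight, while a rough surface spreads them out, giving a wide, dull one.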

Just as lighting has advanced into global illumination, ray tracing, path tracing and so on, PBR has advanced too, with AxF, a far more complex and mathematically accurate way of describing materials.

Real-time ray tracing can improve the visual quality of a game at the cost of processing power, and even with recent advancements many developers still choose to keep the feature turned off. That doesn’t stop people from upgrading or modifying older games to use ray tracing to some degree. The most notable game to gain this feature through community modification is “The Elder Scrolls V: Skyrim”, and others include “Fallout 4”. However, as with most inventions, the idea has been known for a long time and was invented well before hardware allowed us to make much use of it. Albrecht Dürer is credited with inventing the idea of ray tracing in the 16th century, and his writings on the subject are documented in Four Books on Measurement. Using a computer to generate shaded pictures with ray tracing was first done by Arthur Appel in 1968.

The pros and cons of real-time ray tracing compared to light mapping, which bakes lighting into the texture map, are many. For example, to light a 3D scene realistically, the rays of light that hit an object must be traced in order to calculate accurate illumination, and doing this in real time is very taxing on computer hardware. Light mapping renders the lighting into the texture map ahead of time, which takes fewer resources and renders faster than real-time ray tracing. However, if there are many objects of varying complexity or opacity in the scene, this might not hold in every scenario. Games such as Minecraft now allow ray tracing, and with the techniques mentioned above combined, the game looks completely different than it did originally.
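To see where the cost comes from, here is a sketch of the most basic building block of a ray tracer: testing one ray against one sphere. A real scene needs tests like this for huge numbers of rays against far more complicated geometry every frame, whereas a lightmap is just a texture lookup. The function name and the scene values are illustrative.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit in front of it, or None.

    Simplification: a ray that starts inside the sphere is reported as a miss.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    discriminant = b * b - 4.0 * c  # the quadratic's a is 1 for a unit direction
    if discriminant < 0.0:
        return None  # the ray passes beside the sphere
    t = (-b - math.sqrt(discriminant)) / 2.0
    return t if t > 0.0 else None

camera = (0.0, 0.0, 0.0)
sphere_center = (0.0, 0.0, 5.0)

hit = ray_sphere_hit(camera, (0.0, 0.0, 1.0), sphere_center, 1.0)   # aimed at it
miss = ray_sphere_hit(camera, (0.0, 1.0, 0.0), sphere_center, 1.0)  # aimed away
```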

The number of interactive GPU-rendered visuals keeps expanding quickly. However, there are several aspects of lighting that can’t be simulated with current techniques. One thing graphics cards struggle with is rendering high-quality lighting effects. Your graphics card probably can’t realistically show light bouncing off objects in the scene, because a lit object is not itself treated as a light source. One way to do this is through ray tracing, which takes a global view of the scene, but doing so perfectly is not possible with current hardware. In order to actually use ray tracing in production, a number of techniques would need to be implemented first. As of today there are numerous challenges that come with using ray tracing, and it will take more time and development before it becomes widespread in video game lighting; the same is true of relying one hundred percent on Lumen in Unreal Engine.

Overall, our technology is progressing quickly, but the hardware still needs to keep up with the expensive volumetrics, shadows, and transparency that come with high-fidelity lighting. That is not to say games are not pushing and developing tech to overcome it; Horizon Zero Dawn, God of War, and others are doing exactly that.

That is it for now. I hope you enjoyed the random post and learned something.

Disclaimer: We do not own the images above; they were taken from the internet to populate the article, so we take no credit for them. The information provided here comes from several articles, Wikipedia and books, most of which we tried to link in the article.
